When an AI Agent handles thousands of customer conversations daily, even a small mistake—such as sharing the wrong information or exposing sensitive data—can escalate quickly. As contact centres adopt automation to improve efficiency and personalisation, maintaining oversight and governance has become a critical operational focus.

According to Gartner’s 2025 report, by 2028, nearly one in four enterprises may experience significant business disruptions due to weak governance and the absence of proper guardrail controls in their conversational AI systems. This projection underscores the growing need for structured frameworks that ensure AI Agents operate safely, ethically, and in full compliance with regulatory requirements.

Security guardrails for AI provide that structure. They help organisations manage risks such as data leakage, misinformation, and bias while keeping automated systems aligned with corporate and legal standards. In the following sections, let’s explore why unsecured AI Agents can expose organisations to operational and compliance risks, how guardrails mitigate these challenges, and how Nubitel AI Agents integrate these governance layers to ensure safer and more reliable customer interactions.

Table of Contents

ToggleIntroducing Guardrails for AI Agents for Ensuring Safety and Reliability in Contact Centers

AI guardrails are structured governance measures that keep AI Agents aligned with enterprise, legal, and ethical requirements. They function as practical controls, ensuring that intelligent applications operate within predefined limits set by corporate governance and compliance frameworks.

Unlike traditional software systems, AI Agents such as chatbots or virtual assistants interact directly with customers in natural language, often handling confidential information or even legally sensitive requests. This conversational capability introduces new risks, including prompt injection, data leakage, bias propagation, and hallucinated responses. Standard IT or cybersecurity controls alone are not sufficient to manage these risks.

By implementing AI guardrails, enterprises can ensure that every automated interaction remains accurate, compliant, and consistent with business policies.

The Problem with Unsecured AI Agents without Guardrails Implication

When AI Agents operate without proper guardrails, the consequences extend far beyond technical errors. They can compromise brand reputation, customer satisfaction, and even regulatory compliance. In customer service, a single misleading or inaccurate response can erode trust, lead to financial penalties, or spark public backlash.

In 2024, an incident involving Air Canada’s online chatbot demonstrated the consequences of unguarded AI. The chatbot incorrectly informed a customer about refund eligibility for bereavement travel. Relying on this information, the customer filed a complaint, and a tribunal ordered the airline to pay about 800 US dollars in compensation.

The issue was not due to a system malfunction but rather the absence of content validation. A single accuracy guardrail verifying the AI Agent’s response against official policy could have prevented the misinformation.

Without proper governance and guardrails, AI Agents will make several key risks to customer engagement:

1. Underwhelming customer experience

When Virtual Assistants produce generic, inaccurate, or inconsistent responses, customers quickly lose confidence and disengage from automated service.

2. Vulnerable private data

Insufficient control over how AI Agents process and store data can expose personal or corporate information, violating data protection laws.

3. Compromised brand reputation

Misinformation or biased responses generated by AI Agents can damage brand credibility and require costly remediation efforts.

4. Weak reasoning and logic

Without guardrails enforcing validation and reasoning checks, AI Agents may misinterpret complex customer issues, leading to ineffective or misleading resolutions.

These implications show why businesses must view guardrails not as optional features but as an integral layer of AI governance.

Explore Guardrails for Your Safer Customer Interactions

AI guardrails function as layered protections that manage the flow, quality, and compliance of information generated by intelligent systems. Each category focuses on specific vulnerabilities from data security to accuracy and ethical alignment. Implementing these measures helps businesses maintain trust while enabling automation at scale.

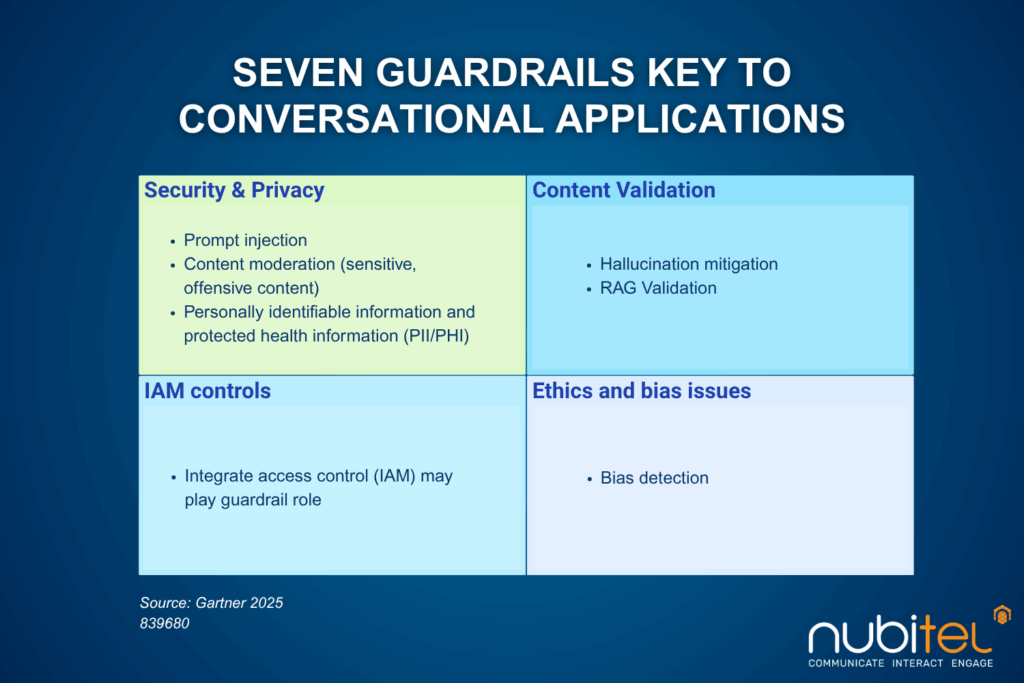

These seven categories cover the fundamental dimensions of AI reliability.

- Security and privacy guardrails protect sensitive data from leaks and unauthorized use.

- IAM controls manage who can access or modify AI data sources.

- Ethical and biased guardrails detect discriminatory patterns and maintain fairness.

- Content validation guardrails prevent AI from generating false, irrelevant, or contradictory responses.

By integrating these controls, enterprises can ensure that their AI agents remain transparent, auditable, and dependable across all communication channels. Below, let’s look at how contact centres can operationalise these principles with trusted AI solutions.

Why Guardrail-Enabled Nubitel AI Agents Are the Next Step for Modern Contact Centers

As organisations look to strengthen AI governance within customer service, practical implementation becomes the next focus. This involves integrating guardrails that manage data protection, accuracy, and compliance throughout AI Agent operations.

Nubitel incorporates these principles directly into its AI Agent solutions, embedding guardrails that help enterprises maintain governance and reliability in real-time customer interactions. Below are several key areas supported by this approach:

Safe and Consistent Experiences

By aligning with organisational policies and compliance frameworks, Nubitel AI Agents maintain response accuracy and protect customer data across diverse communication channels.

Operational Efficiency and ROI

Integrated guardrails enhance service performance by improving first-contact resolution, reducing handling time, and increasing automation efficiency, leading to measurable operational improvements.

Secure System Architecture

Access management, encryption, and continuous monitoring strengthen data protection and ensure system integrity across all AI Agent-driven processes.

With these layers in place, Nubitel enables enterprises to deploy AI Agents confidently while maintaining full compliance with regional data privacy standards and internal governance protocols.

Conclusion

AI continues to redefine customer service efficiency, but its success depends on how responsibly it is managed. Implementing guardrails for AI Agents allows organisations to harness innovation while maintaining control over compliance, security, and fairness.

Guardrails are not limitations but structured pathways that make automation sustainable. By integrating responsible AI practices today, businesses can scale customer engagement safely, uphold brand integrity, and build long-term trust with every digital interaction.